DuckDB Insert Benchmark

We want to compare three methods of inserting data to a DuckDB table:

- Individual Inserts

- Individual Inserts - Wrapped in a commit

- Batched Value Inserts

For our test we are going to be running via JDBC driver as we want to test the DuckDB performance for QStudio. The numbers are so significantly different that the principle should apply to all DuckDB instances.

Benchmark Code

Individual Inserts

Batched Inserts

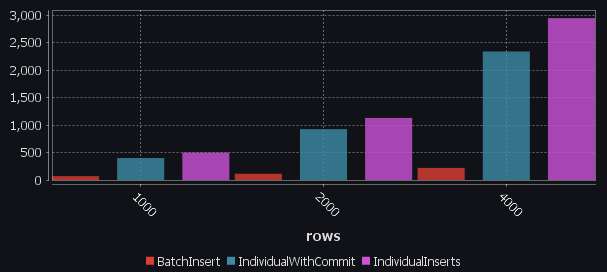

DuckDB Benchmark Results

Table shows the milliseconds taken for inserting the given number of rows into an in-memory table for each approach.

| rows | DuckDB IndividualWithCommit (ms) | DuckDB IndividualInserts (ms) | DuckDB BatchInsert (ms) | H2 BatchInsert (ms) | ColumnarDB Individual (ms) |

|---|---|---|---|---|---|

| 1000 | 400 | 500 | 70 | ||

| 2000 | 926 | 1130 | 114 | ||

| 4000 | 2340 | 2948 | 220 | 51 | |

| 20000 | 1150 | 123 | 450 | ||

| 40000 | 2264 | 240 | 800 |

*Note H2 is a java embedded database. ColumnarDB is an enterprise column-oriented database.

Graph showing Insert Time-Taken. Smaller = Better

Observations

- Batched Inserts are 10x faster, even on small data sets.

- Batched inserts scale sub-linearly, Individual Inserts do not. i.e. The larger the number of inserts, it gets slower per insert.

Open Question, is there a size limit for batched inserts?

I tested 40,000 inserts which ran in 2264ms.

Should also try the appender.